Upcoming Events

AI Gala 2025

Join us for an extraordinary evening at the stunning Oxford Natural History Museum, where the worlds of academia and industry converge to explore the transformative power of Artificial Intelligence. This Gala brings together professionals, researchers, and entrepreneurs working at the forefront of Artificial Intelligence. Expect string quartets, great food, industrial demos, interesting talks, and a dance floor. It’ll definitely be a night to remember. For more information, please visit the AI Gala website.

OxAI Mini-Conference

What is the OxAI Mini-Conference?

The OxAI Mini-Conference is a student-organized event. It's an opportunity for students across disciplines to come together, share ideas, and ignite conversations around AI's impact on our studies.

Mentorship Program Kickoff Session

Mentorship Program Kickoff Interest Form

The Oxford AI Society (OxAI) welcomes you to hear more about our third annual mentorship program!

Practical tips and lessons learned from building AI tools as a solo dev

By Josh Lawman, founder of translatethisvideo.com

Bio:

Josh Lawman is a developer who builds AI tools. He is currently working on Translate This Video (translatethisvideo.com), a video translation and dubbing service. Before his current role, he led a group of data scientists in California building optimization tools for an ad tech system.

Abstract: This talk delves into the challenges faced when building generative AI products as a solo developer or in a small team. Its focus is practical, and it is aimed at those who are eager to leverage generative AI models in their own project or startup. The talk will go over the lessons learned throughout the creation of a handful of AI tools, including a video dubbing and translation tool. Come for advice on building playable AI demos that look good, leveraging publicly available models, and getting the most out of language models in a developer context.

Date: 12 Feb 2024

Time: 5:30 PM - 6:30 PM

Location: Teaching Room (277), Oxford e-Research Centre

The Technical Founder’s Roadmap

Oxbridge AI Challenge Event

Entrepreneurship Hub at OxAI

In this talk, Harry Berg, a successful Y Combinator alumnus, will outline a step-by-step process for developing the practical skills behind building and launching products, so you can turn your ideas into reality. Learn about the AI bootcamp he found and used that helped him scale his startup from nothing to 30 full-time employees without any outside funding.

Date: Thursday 8th February

Time: 06:30 PM to 08:30 PM

Location:

Keble Road Entrance: 7 Keble Road, Oxford e-Research Centre;

Parks Road Entrance: Department of Computer Science, Wolfson Building, Parks Road, OXFORD, OX1 3QD

OxAI Social

YOU ARE INVITED to the first OxAI social in 2024! Join us for an unforgettable evening with inspiring AI conversations. This is the best time to connect with like-minded individuals who share a passion for cutting-edge technology.

Date: Wednesday Feb 7th

Time: 5-8pm

Location: Lamb and Flag

AI Panel and Q&A

The Oxford Founders and OxAI will be hosting Nigel Toon from Graphcore and Andrey Kravchenko for an AI panel event and Q&A followed by networking drinks.

Time: 5th Feb, 18:30 - 21:00

Location: OeRC Teaching Room (Room 277), Oxford e-Research Centre, 7 Keble Road.

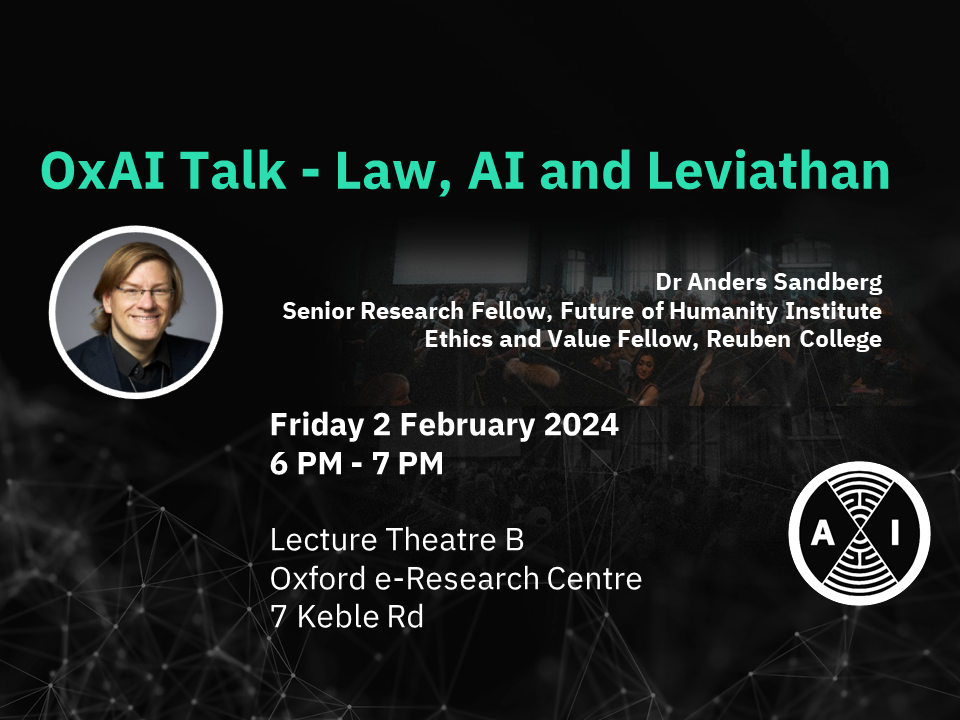

OxAI Talk: Law, AI and Leviathan by Dr Anders Sandberg

OxAI Talk Event: Law, AI and Leviathan by Dr Anders Sandberg

Date: Friday 2nd February

Time: 6 PM - 7 PM

Location: Lecture Theatre B

Parks Road Entrance: Department of Computer Science, Wolfson Building, Parks Road, Oxford, OX1 3QD

Keble Road Entrance: Oxford e-Research Centre, 7 Keble Rd

Abstract:

What are legal systems? One way of thinking about them is as a form of extended cognition for solving tricky coordination problems in society, giving us peace and prosperity (and power to some). It is a kind of "AI" platform running on human minds and pieces of paper, powered by human autonomy. This is also true for many other institutions like markets. But real AI seems able to perform many functions going into such institutions - at first by automating simple decisions or low-level processing, but plausibly gradually being able to solve the coordination problems far better than humans. If we want to get the goods, it is rational to hand over more and more to AI. But even if we solve the alignment problem well, we may have another problem: human autonomy is no longer necessary, and may actually be suboptimal. That appears worrisome. I will discuss whether there is a way out of this conclusion, and invite a conversation about how to design ahead to handle such "second order alignment" problems.

Bio: Dr Anders Sandberg has a background in computer science, neuroscience and medical engineering. He obtained his PhD in computational neuroscience from Stockholm University, Sweden, for work on neural network modelling of human memory.

Since 2006, Anders has been at the Future of Humanity Institute (in the Faculty of Philosophy) where his work centres on management of low-probability high-impact risks, estimating the capabilities of future technologies, and very long-range futures. Topics of particular interest include machine learning, ethics, global catastrophic risk, cognitive enhancement, future studies, neuroethics, and public policy.

He is a research associate at the Oxford Uehiro Centre for Practical Ethics, the Institute for Future Studies (Stockholm) and the Center for the Study of Bioethics (Belgrade). He often debates science and ethics in international media.

Managing AI Risks in an Era of Rapid Progress

When: 30th January, 6 - 7 PM, followed by a social.

Where: Department of Statistics

Abstract:

Jan will outline risks from upcoming, advanced AI systems. They will then examine large-scale social harms and malicious uses, as well as an irreversible loss of human control over autonomous AI systems. In light of rapid and continuing AI progress, they propose urgent priorities for AI R&D and governance.

Yoshua Bengio: Towards Quantitative Safety Guarantees and Alignment

Abstract:

This presentation will start by providing context for avoiding catastrophic outcomes from future advanced AI systems, especially the need to address both the technical alignment challenge and the political challenge of coordination, at national and international levels. In particular, Prof. Bengio will discuss the proposal of a multilateral network of publicly funded non-profit AI labs to work on alignment and prepare for the eventual emergence of rogue AIs, presented in his Journal of Democracy paper. He will then outline a machine learning research program to obtain quantitative and conservative risk evaluations to address the alignment challenge. This is based on amortized inference of the Bayesian posterior over causal theories of the data available to the AI, using generative AI to approximate that posterior and mathematical methods recently developed around generative flow networks (GFlowNets).

Sign up here to attend in-person: https://forms.gle/jskeGfsoziiWLnzC7

Sign up here to attend virtually: https://forms.gle/V4c2DJfCQRBH73io8

Generative AI & Large Language Models (LLMs) in Financial Services

Overview of the newest LLM External AI Models and Datasets, General Industry Information and AI Products.

More Artificial than Intelligent: Is the AI hype blinding scientists?

Date: 23 Nov 2023

Time: 5pm-6pm

Location: Teaching Room (277) at Oxford e-Research Centre, 7 Keble Road, Oxford.

Artificial Intelligence is not new, and it did not start impacting our lives directly only when ChatGPT was released. But the wide social focus on these technologies does impact the way that scientists perceive them and engage with the technology. In my sub-field of astronomy I have been exposed to new models and new prototypes, each promising a solution to how we automatically classify cosmic explosions. Years of new promises have failed to deliver a compelling product, and since I started working on this topic as a Schmidt AI in Science Fellow, I have begun to uncover why.

In this talk I want to present some of the issues I am seeing in how science can be dazzled by the AI mania, and I want to discuss how we can disentangle the true potential of ML technologies from the promises made to investors and media outlets, so that we can harness these new tools for science in a reliable and sustainable way.

An Introduction to Information-Theoretic Bounded Rationality

For this week’s speaker event we will be hosting Pedro Ortega as he gives his talk on “An Introduction to Information-Theoretic Bounded Rationality”. Come down to the statistics department at 6pm on Tuesday the 7th to hear what he has to say - all followed by a free pizza social immediately afterwards!

Bounded rationality, the art of decision-making with limited resources, is an important challenge in AI, neuroscience, and economics. This talk reviews an information-theoretic formalization that offers a sharp characterization.

The theory champions the free energy functional as the go-to objective function. This functional excels for three reasons: it narrows the solution space, enables Monte Carlo planners that are precise without exhaustive search, and encapsulates model uncertainty arising from insufficient evidence or interactions with agents of uncertain motives.

We delve into the realm of single-step decision-making and demonstrate its expansion to sequential decisions through equivalence transformations. This broadens our scope to a vast array of decision problems, capturing both classical decision rules (like EXPECTIMAX and MINIMAX) and more nuanced, trust- and risk-sensitive planning approaches.